As 5G and multi-access edge computing bring processing power closer to the action, technologies like computer vision (a subset of artificial intelligence) can be leveraged for safety, sustainability, and security in smart applications. While centralized cloud computing makes it easier to process data at scale, sending data off to the cloud doesn’t always make sense in real-time situations such as autonomous vehicles and industrial manufacturing.

We spoke with Emrah Gultekin, CEO of Chooch AI, an artificial intelligence trailblazer with expertise in computer vision, to get a better understanding of real-world use cases and the potential for life-changing applications in the next few decades.

Q: Please introduce yourself.

Emrah: I was born in Istanbul, Turkey, grew up in the U.S. and Saudi Arabia, and spent a lot of time in different countries. I grew up in Generation X, a very sci-fi-driven generation. I got my first Apple IIe computer in 1985 and started coding. I was also an avid swimmer, which got me through boarding school and college and kept me out of trouble at the same time.

It’s been an interesting ride experiencing different cultures, consuming and living the technology that we talk about today. I’ve been involved in many businesses. This is my seventh company; some were failures, some were successes.

My passion since childhood has been to fuse social sciences with business and technology to create businesses that have good impacts on human wellbeing.

Q: We really resonate with you there. What got you into artificial intelligence and what was the inspiration to start a company around artificial intelligence?

Emrah: You get into things such as artificial intelligence because you have some foresight on what the next evolution of the technology is going to be. That’s the case for us at Chooch AI.

My CTO and co-founder, Hakan, developed a program for healthcare professionals to quickly diagnose radiology and other imagery coming from different types of sensors, especially in Asia, where a doctor has to diagnose around 100 people a day as opposed to maybe 10 people here. We wondered if we could take that, generalize it, and make it available to the general public.

That was the push, but the funny thing is, we didn’t know where it was going to lead. It is a type of blind courage. The market and this entire ecosystem kept pushing us forward.

Q: Sounds like an organic market push and demand for Chooch AI to expand as you keep moving forward.

Emrah: Exactly.

It’s incremental and done in little steps. That’s how entrepreneurs work. Then, you have a big step.

Still today, there are lots of fundamental challenges with scaling this type of AI. It’s an opportunity for companies like ours that can solve these issues. It’s akin to the Internet in the mid-90s.

Today, we call it AI, but it’s just a better way of doing statistics and calculation. It’s a superior machine, basically.

Q: We’ve been hearing about artificial intelligence for a really long time now. It’s one of those things that people are always questioning. Should people be scared of artificial intelligence? What should people understand about it at the initial level?

Emrah: We made a great semantic mistake by calling it “artificial intelligence”; the name is a misnomer. There’s nothing artificial about intelligence. If we called it a type of supercomputer, we would be less inclined to think that we can replace humans that easily.

AI isn’t replacing humans. It’s aiding humans to do their jobs far better.

We must educate the workforce in a way where new jobs are being filled to support the technology. Who’s going to train the AI? Humans.

Q: What is computer vision, and in what types of real-world applications are you seeing it used?

Emrah: Computer vision is an important part of AI. If you’re trying to copy human intelligence, over 80% of information processed and stored in the brain is visual. If you translate that into machines, it should be around the same percentage, depending on the use case.

The grand theme here is to copy that visual intelligence, i.e. detection of actions, objects, states, and so forth. That will probably continue to be built on for the next 20 years.

There are three different buckets to visual detection, and to AI in general. As a platform, we’ve bundled them together:

- Data set generation (collecting and generating data): creating an annotated data set for the AI to learn from.

- Training the model based on the data set you’ve collected using deep neural networks.

- Inferencing: figuring out where to push that data, how to get new information coming into the system, and how the model can predict on new information.

Then, cycle back to data set collection. The cycle is really crucial.
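The three buckets and the cycle back to data collection can be sketched in a few lines of Python. This is only an illustration under loud assumptions: a toy nearest-centroid classifier stands in for the deep neural networks mentioned above, short numeric tuples stand in for images, and the names `Example`, `train`, `infer`, `run_cycle`, and `annotate` are hypothetical and do not come from Chooch AI's platform.

```python
from dataclasses import dataclass


@dataclass
class Example:
    features: tuple  # numeric feature vector standing in for an image
    label: str       # annotation supplied by a human


def train(dataset):
    """Bucket 2: fit a toy model (one mean feature vector per label)."""
    sums, counts = {}, {}
    for ex in dataset:
        acc = sums.setdefault(ex.label, [0.0] * len(ex.features))
        for i, value in enumerate(ex.features):
            acc[i] += value
        counts[ex.label] = counts.get(ex.label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc)
            for label, acc in sums.items()}


def infer(model, features):
    """Bucket 3: predict the label whose centroid is closest."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(model, key=lambda label: squared_distance(model[label]))


def run_cycle(dataset, incoming, annotate):
    """One turn of the loop: infer on new data, have a human annotate it,
    add the model's mistakes to the data set (bucket 1), then retrain."""
    model = train(dataset)
    for features in incoming:
        truth = annotate(features)  # hypothetical human-in-the-loop step
        if infer(model, features) != truth:
            dataset.append(Example(features, truth))
    return train(dataset)


dataset = [Example((0.0, 0.0), "no_hat"), Example((1.0, 1.0), "hard_hat")]
model = run_cycle(dataset, [(0.4, 0.4)], annotate=lambda f: "hard_hat")
print(infer(model, (0.4, 0.4)))  # prints "hard_hat" after retraining
```

Before the cycle, the toy model labels `(0.4, 0.4)` as `no_hat`; after the corrected example is added and the model retrained, the same input comes back as `hard_hat`, which is the point of cycling back to data collection.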

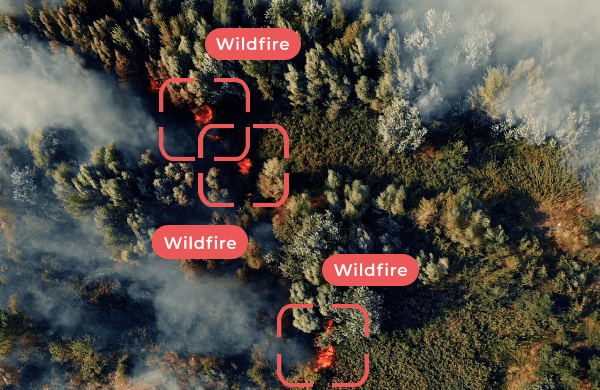

Examples of real-world applications are detecting if someone fell in a nursing home or in a public space, if workers have their hard hats on, locations of wildfires in a dense forest, demographics of people checking into a hotel or retail space, detecting objects people are picking up, license plate reading, etc.

You have components of these all over the place. Vantiq and Chooch AI make it possible for all of it to happen on one platform, working as one system.

Q: What challenges do you see in real-world applications and what do you need for this to actually happen?

Emrah: One of the great breakthroughs over the past couple of years has been the newfound ability to put the inference engines onto devices – on the edge or on-prem.

For video feeds, if you’re doing 24/7 monitoring of a space, drone, business, or manufacturing site, that feed needs to be constantly inferenced, which causes a lot of friction, if you will. One of the things that greatly minimizes that friction is putting these inference engines onto the edge. That is going to accelerate the adoption of these systems.

There are privacy concerns about all this data going into different clouds or areas. One reason we’ve put everything onto the edge is so that the customer owns all of that downstream inferencing and, for the most part, nothing shows up in the cloud.

Getting people to start using these systems is a challenge because they’re not replacing a system that they already have. We’re creating a new thing for them to adopt.
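As a rough sketch of the edge pattern described here, the loop below runs detection on the device and forwards only small event records, so the raw frames never leave it. Everything in it is an assumption made for illustration: `edge_events`, `fake_detector`, the `min_confidence` threshold, and the event fields are hypothetical names, not part of any Chooch AI or Vantiq API.

```python
def edge_events(frames, detect, min_confidence=0.8):
    """Run detection locally on each frame and yield only compact events.

    `detect` is any callable returning (label, confidence) pairs for a frame.
    The frames themselves are never yielded, so they stay on the device.
    """
    for index, frame in enumerate(frames):
        for label, confidence in detect(frame):
            if confidence >= min_confidence:
                yield {"frame": index, "label": label, "confidence": confidence}


def fake_detector(frame):
    """Hypothetical stand-in for an on-device model; frames are plain strings."""
    if frame == "fall":
        return [("person_fallen", 0.93)]
    return [("person", 0.55)]


events = list(edge_events(["walk", "fall", "walk"], fake_detector))
print(events)  # one event, for the frame at index 1
```

Only the single high-confidence detection is emitted; the two low-confidence frames produce nothing to send downstream.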

Q: We often hear the phrase, “it takes a village”. It really does take a village or an ecosystem of players for a transformative solution. How do you see the ecosystem play and partnership between Chooch AI and Vantiq to accelerate real-world applications for computer vision?

Emrah: We’re excited to leverage Vantiq’s capabilities to accelerate the adoption of visual intelligence-based smart applications. Vantiq’s super low-latency, full application development lifecycle combined with Chooch AI’s intelligent computer vision makes for a very exciting partnership. These applications can sense, analyze, and act on complex problems, such as floods, traffic accidents, factory breakdowns, or symptomatic people entering a corporate lobby, all in real time.

We have to say Vantiq’s team is very, very intelligent. I don’t think we’ve met a team so sharp yet. The entire ecosystem is putting us together in a way where we are able to push transformative products and efficiencies to the enterprise level.

Saying ‘AI’ today is like saying ‘internet’ in the 90s; there are 100, 200 players doing all kinds of stuff, maybe even more. Our teams focus on things that we do well and we make sure that the integration is smooth, so that these applications are up and running as quickly as possible.

We strive to continue building this ecosystem together so that we can provide these types of products and tools for innovation.

—

Register for our webinar with Chooch AI on April 7, 2021: Implementing Computer Vision Applications in the Real World.